From vision to hand action

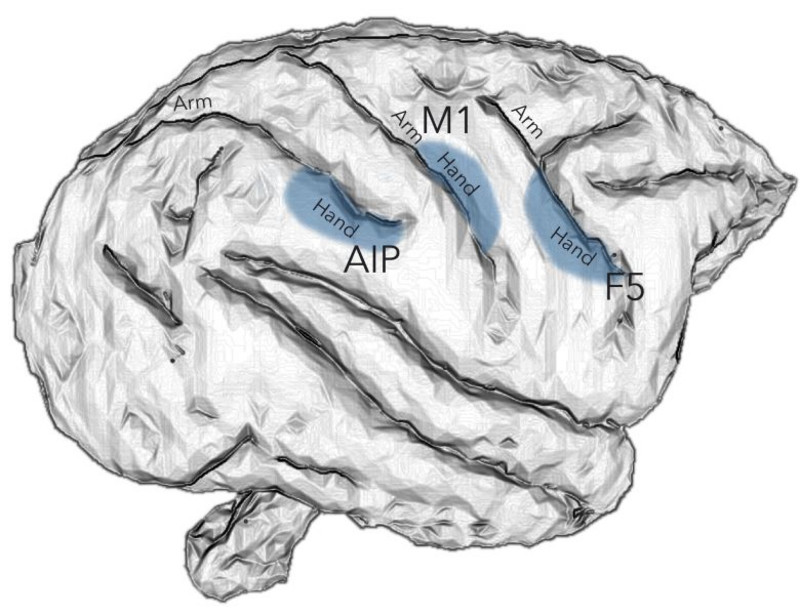

Our hands are highly developed grasping organs that are in continuous use. Long before we stir our first cup of coffee in the morning, our hands have executed a multitude of grasps. Directing a pen between our thumb and index finger over a piece of paper with absolute precision appears as easy as catching a ball or operating a doorknob. The neuroscientists Stefan Schaffelhofer and Hansjörg Scherberger of the German Primate Center (DPZ) have studied how the brain controls the different grasping movements. In their research with rhesus macaques, they found that the three brain areas AIP, F5 and M1, which are responsible for planning and executing hand movements, perform different tasks within their neural network. The AIP area is mainly responsible for processing visual features of objects, such as their size and shape. This optical information is translated into motor commands in the F5 area. The M1 area is ultimately responsible for turning these motor commands into actions. The results of the study contribute to the development of neuroprosthetics that should help paralyzed patients to regain their hand functions (eLife, 2016).

The three brain areas AIP, F5 and M1 lie in the cerebral cortex and form a neural network responsible for translating visual properties of an object into a corresponding hand movement. Until now, the details of how this “visuomotor transformation” is performed have been unclear. During the course of his PhD thesis at the German Primate Center, neuroscientist Stefan Schaffelhofer intensively studied the neural mechanisms that control grasping movements. “We wanted to find out how and where visual information about grasped objects, for example their shape or size, and motor characteristics of the hand, like the strength and type of a grip, are processed in the different grasp-related areas of the brain”, says Schaffelhofer.

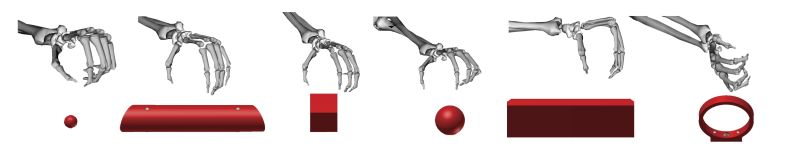

For this, two rhesus macaques were trained to repeatedly grasp 50 different objects. At the same time, the activity of hundreds of nerve cells was measured with so-called microelectrode arrays. In order to compare the applied grip types with the neural signals, the monkeys wore an electromagnetic data glove that recorded all the finger and hand movements. The experimental setup was designed to observe the phases of the visuomotor transformation in the brain individually, namely the processing of visual object properties, the planning of the movement and its execution. For this, the scientists developed a delayed grasping task. The object was briefly lit before the start of the grasping movement so that the monkey could see it. The subsequent movement took place in the dark after a short delay. In this way, the visual and motor signals of neurons could be examined separately.

The results show that the AIP area is primarily responsible for the processing of visual object features. “The neurons mainly respond to the three-dimensional shape of different objects”, says Stefan Schaffelhofer. “Due to the different activity of the neurons, we could precisely distinguish whether the monkeys had seen a sphere, cube or cylinder. Even abstract object shapes could be differentiated based on the observed cell activity.”

In contrast to AIP, areas F5 and M1 did not represent object geometries, but the corresponding hand configurations used to grasp the objects. The information carried by F5 and M1 neurons strongly resembled the hand movements recorded with the data glove. “In our study we were able to show where and how visual properties of objects are converted into corresponding movement commands”, says Stefan Schaffelhofer. “In this process, the F5 area plays a central role in visuomotor transformation. Its neurons receive direct visual object information from AIP and can translate the signals into motor plans that are then executed in M1. Thus, area F5 is connected to both the visual and the motor parts of the brain.”

Knowledge of how grasp movements are controlled is essential for the development of neuronal hand prosthetics. “In paraplegic patients, the connection between the brain and limbs is no longer functional. Neural interfaces can replace this functionality”, says Hansjörg Scherberger, head of the Neurobiology Laboratory at the DPZ. “They can read the motor signals in the brain and use them for prosthetic control. In order to program these interfaces properly, it is crucial to know how and where our brain controls the grasping movements.” The findings of this study will facilitate new neuroprosthetic applications that can selectively process the individual information of each area in order to improve their usability and accuracy.

Original publication

Schaffelhofer, S., Scherberger, H. (2016): Object vision to hand action in macaque parietal, motor and premotor cortices. eLife, DOI: http://dx.doi.org/10.7554/eLife.15278

Video

The video "From vision to hand action" shows details of the research: https://youtu.be/NJxe4kGeyOs